MIT x Cambridge

This project was conducted under the supervision of Dr Sławomir Tadeja, Postdoctoral Associate in MIT Mechanical Engineering and researcher in the MIT Learning Engineering and Practice (LEAP) Group, where he works on learning technologies for manufacturing education. Dr Tadeja also collaborates with the Cyber-Human Lab at the University of Cambridge, through which this supervision was established.

Additional collaborators included Dr Ziling Chen, Rolando Bautista-Montesano, Yeo Jung Yoon, and Dr John Liu at MIT, with Dr Thomas Bohné serving as the Cambridge PI.

The Concept

This research investigates how symbolic vibrotactile cues can support perception, error avoidance, and precision control in smartphone-based teleoperation of a robotic arm.

Teleoperation typically suffers from the loss of tactile perception due to:

+ Latency

+ Reduced sensing

+ Camera perspective distortions

+ Uncertainty about contact and alignment

This work develops and evaluates a structured haptic cue language for a smartphone interface controlling a UR5e robotic arm. The goal is to determine whether simple, low-cost vibrotactile cues can improve performance and reduce operator workload in fine-manipulation tasks.

The system builds toward lightweight, accessible teleoperation tools for manufacturing, training, remote assistance, and task guidance.

Designed for Real-World Remote Robotics – Building Upon Previous Work

Building upon the work of Chen et al. in the MIT LEAP Group, the teleoperation system integrates a ROS-controlled robotic arm, a custom smartphone client, and a cross-continental communication pipeline. The smartphone serves as both controller and haptic output device, enabling a single low-cost interface for perception, control, and feedback.

+ ROS Noetic framework

+ Real-time robot streaming via EGM and Protobuf over UDP

+ Symbolic haptic cues built using Apple CoreHaptics

+ Passive augmentation module improving tactile resolution

+ Integrated performance, workload, and cue-recognition metrics

The system mirrors the constraints of industrial teleoperation: network delay, limited sensing, compressed feedback, and the need for rapid situational judgement under uncertainty.

Using a Smartphone as a High-Fidelity Teleoperation Interface

The smartphone interface was designed to make robotic manipulation accessible to non-experts. It offers intuitive AR-based positioning, smooth gesture control, and immediate haptic cues that assist the user in maintaining alignment and avoiding errors during fine-manipulation tasks.

+ AR-based spatial alignment

+ Visual guidance overlays

+ Two feedback conditions: visual-only, and symbolic + augmented haptic cues

+ Real-time robot state feedback

+ Integrated safety gating and limit monitoring
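As an illustration of what safety gating and limit monitoring can involve, the sketch below caps the size of each commanded step and clamps the target to an axis-aligned workspace box. It is a minimal sketch under stated assumptions, not the project's implementation: `WorkspaceLimits`, `MAX_STEP_M`, `gate_command`, and every numeric value are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkspaceLimits:
    """Axis-aligned box (metres) the tool may occupy. Values are illustrative only."""
    x: tuple = (-0.40, 0.40)
    y: tuple = (0.20, 0.70)
    z: tuple = (0.05, 0.50)


MAX_STEP_M = 0.01  # hypothetical cap on displacement per command


def gate_command(current, target, limits=WorkspaceLimits()):
    """Return (safe_target, was_modified) for a requested tool position.

    current, target: (x, y, z) positions in metres.
    """
    # 1. Limit the step size so a single command cannot jump far.
    step = [t - c for c, t in zip(current, target)]
    norm = sum(s * s for s in step) ** 0.5
    if norm > MAX_STEP_M:
        scale = MAX_STEP_M / norm
        step = [s * scale for s in step]
    stepped = [c + s for c, s in zip(current, step)]

    # 2. Clamp the result to the allowed workspace box.
    bounds = (limits.x, limits.y, limits.z)
    clamped = [min(max(v, lo), hi) for v, (lo, hi) in zip(stepped, bounds)]

    # Report whether the gate changed what the operator asked for.
    modified = any(abs(a - b) > 1e-12 for a, b in zip(clamped, target))
    return tuple(clamped), modified
```

The `was_modified` flag is the kind of event that could also drive a haptic or visual warning, so the operator knows the request was limited.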

User-centred design principles guided the interface architecture to ensure clarity, learnability, and responsiveness.

Symbolic Haptic Language Design

The research introduces a structured haptic language designed to be identifiable, discriminable, and semantically meaningful during robotic tasks. The cue set spans five categories:

+ Contact

+ Proximity to error/target zones

+ Error/Deviation

+ Alignment

+ Task Completion

Each tacton was defined through:

+ Temporal modulation

+ Intensity shaping

+ Distinct patterning for discriminability

Each category features distinct temporal patterns, intensities, and rhythmic structures. These cues were iteratively tested to maximise recognisability and reduce confusion among operators performing precision tasks.
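One way to make temporal modulation and intensity shaping concrete is to describe each tacton as a short sequence of pulses. The sketch below is only an illustration of that idea: the `Pulse` and `Tacton` types and the two example patterns are invented for this example and are not the cue definitions used in the study.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pulse:
    start_ms: float     # onset relative to the start of the pattern
    duration_ms: float  # how long the actuator is driven
    intensity: float    # 0.0 (off) to 1.0 (strongest)


@dataclass(frozen=True)
class Tacton:
    name: str
    pulses: Tuple[Pulse, ...]

    def total_ms(self):
        """Length of the whole pattern, from first onset to last offset."""
        return max(p.start_ms + p.duration_ms for p in self.pulses)

    def envelope(self, step_ms=10):
        """Sample the intensity over time so patterns can be plotted or compared."""
        n = int(self.total_ms() // step_ms) + 1
        samples = []
        for i in range(n):
            t = i * step_ms
            active = (p.intensity for p in self.pulses
                      if p.start_ms <= t < p.start_ms + p.duration_ms)
            samples.append(max(active, default=0.0))
        return samples


# Hypothetical patterns: one strong pulse versus two lighter, spaced pulses.
CONTACT = Tacton("contact", (Pulse(0, 40, 1.0),))
ALIGNMENT = Tacton("alignment", (Pulse(0, 30, 0.5), Pulse(80, 30, 0.5)))
```

In the Swift client, a description like this would presumably be translated into CoreHaptics events; that mapping is not shown here.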

A Unified Teleoperation Interface

An intuitive mobile UI was developed to guide users through alignment, movement, and precision tasks, integrating visual, tactile, and procedural cues into a single workflow.

+ Embedded instruction prompts

+ Real-time state feedback

+ Visual–haptic redundancy for error mitigation

This system was tested with 16 users using a mixed-methods approach that combined quantitative and qualitative assessment (see the process for more details).

Understanding the Teleoperation Landscape

A review of mobile haptics, teleoperation interfaces, and symbolic feedback systems identified gaps in cue recognisability and perceptual clarity, as well as a lack of validated haptic languages on smartphones. Teleoperation is increasingly used in manufacturing (see the IP investigation below), inspection, and medical robotics, yet mobile devices are understudied as haptic teleoperation tools.

Establishing the Research Scope

Following a PRISMA systematic review of the literature, the project focused on three contributions:

+ A low-cost teleoperation pipeline using consumer hardware.

+ A symbolic haptic language for mobile interfaces.

+ A passive augmentation mechanism to enhance tactile perception.

Building the Teleoperation System

A full ROS Noetic control architecture was implemented for a UR5e arm, paired with a mobile client developed in Swift using Apple’s CoreHaptics engine. Network latency, safety constraints, feedback streaming, and synchronisation were addressed through iterative testing with MIT collaborators. The task itself followed a three-step process:

1. Move a small cube to an assigned slot

Selected to amplify fine-control demands and expose ambiguity in visual-only alignment.

2. Move a tall cube to an assigned slot

Chosen to increase instability and collision risk, stressing proximity and deviation cues.

3. Perform a fine-alignment placement task

Designed to reveal how haptic cues support contact interpretation under latency and limited sensing.

These tasks represent controlled proxies for real industrial behaviours such as assembly, inspection, and pick-and-place operations, enabling systematic assessment of how smartphone-based haptic augmentation restores aspects of tactile intuition during remote manipulation.

Developing the Haptic Language

Cue sketching, waveform prototyping, and pilot discriminability testing guided the design.

Each pattern was constrained to be:

+ Short (<200 ms)

+ Distinct

+ Semantically meaningful

+ Robust across users
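The first two constraints in the list above can be checked mechanically. The sketch below is a rough illustration, assuming each pattern is stored as intensity samples taken every 10 ms. The distance measure is a crude stand-in for perceptual distinctness, not a perceptual model, and `STEP_MS`, `MIN_DISTANCE`, and the function names are hypothetical. Semantic meaning and robustness across users cannot be checked this way and still need pilot testing with people.

```python
MAX_DURATION_MS = 200  # the "short" constraint from the list above
STEP_MS = 10           # assumed sampling step of each envelope
MIN_DISTANCE = 0.15    # hypothetical threshold for "distinct enough"


def duration_ms(envelope):
    """Length of a pattern given its sampled intensity envelope."""
    return len(envelope) * STEP_MS


def distance(a, b):
    """Mean absolute intensity difference after zero-padding to equal length."""
    n = max(len(a), len(b))
    a = list(a) + [0.0] * (n - len(a))
    b = list(b) + [0.0] * (n - len(b))
    return sum(abs(x - y) for x, y in zip(a, b)) / n


def check_cue_set(cues):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    for name, env in cues.items():
        if duration_ms(env) >= MAX_DURATION_MS:
            problems.append(
                f"{name}: {duration_ms(env)} ms is not under {MAX_DURATION_MS} ms")
    names = sorted(cues)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if distance(cues[first], cues[second]) < MIN_DISTANCE:
                problems.append(f"{first} vs {second}: envelopes are too similar")
    return problems
```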

Certain concepts (e.g., sharpness cues, 3D motion illusions) were discarded after a feasibility analysis of the Taptic Engines in common smartphones concluded that they would be impossible to encode effectively.

Experimental Design and User Evaluation

A within-subjects study (N = 16) compared two conditions:

+ Visual-only control

+ Symbolic haptic feedback

Participants completed a combined pick-and-place and peg-in-hole task using three blocks while avoiding a marked error region. Symbolic cues encoded contact, boundary proximity, alignment, and gripper state, triggered directly from the robot’s pose and system events.
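To illustrate how cues might be triggered from pose and system events, the sketch below selects at most one cue per update from the tool's planar position relative to a rectangular error region, plus two event flags. The region shape, the `WARN_DISTANCE_M` threshold, the priority order, and all names are assumptions made for this example rather than details of the study software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Axis-aligned rectangle on the work surface, in metres."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def distance_to(self, x, y):
        """Distance from (x, y) to the region; 0.0 when the point is inside."""
        dx = max(self.x_min - x, 0.0, x - self.x_max)
        dy = max(self.y_min - y, 0.0, y - self.y_max)
        return (dx * dx + dy * dy) ** 0.5


WARN_DISTANCE_M = 0.03  # hypothetical distance at which the proximity cue starts


def select_cue(x, y, error_region, gripper_changed=False, in_contact=False):
    """Pick one cue name for this update, or None if nothing should play.

    The priority order (error, contact, proximity, gripper) is an assumption.
    """
    d = error_region.distance_to(x, y)
    if d == 0.0:
        return "error"
    if in_contact:
        return "contact"
    if d < WARN_DISTANCE_M:
        return "proximity"
    if gripper_changed:
        return "gripper"
    return None
```

Choosing a single cue per update avoids overlapping vibrations, which would be hard to tell apart on a single actuator.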

Participant Demographics

Participants were recruited through the University of Cambridge community and spanned a range of genders, ages, occupations, and levels of familiarity with similar technologies, with the aim of obtaining a reasonably varied sample. Because recruitment drew only on an academic environment, the sample may not represent the wider population, and this limitation should be kept in mind when interpreting the performance observed in the experiments.

Objective Measures

Metrics captured:

+ Task Completion Time (TCT)

+ Error counts (collisions, boundary violations, misalignments)

+ Path irregularities, logged from robot pose data

Performance was analysed with GLMs for count data and repeated-measures ANOVA for timing.
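The write-up does not define how path irregularity was computed, so the sketch below shows just one plausible measure that can be derived from logged poses: the excess of the travelled path over the straight line between its endpoints. The function names and the choice of metric are assumptions for illustration.

```python
import math


def path_length(poses):
    """Total distance travelled along a sequence of (x, y, z) positions."""
    return sum(math.dist(a, b) for a, b in zip(poses, poses[1:]))


def path_irregularity(poses):
    """Excess path ratio: 0.0 for a straight line, larger for wandering paths."""
    direct = math.dist(poses[0], poses[-1])
    if direct == 0.0:
        raise ValueError("start and end positions coincide; ratio is undefined")
    return path_length(poses) / direct - 1.0


# A straight move scores 0.0; a detour around three sides of a square scores 2.0.
straight = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
detour = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (1.0, 0.0, 0.0)]
```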

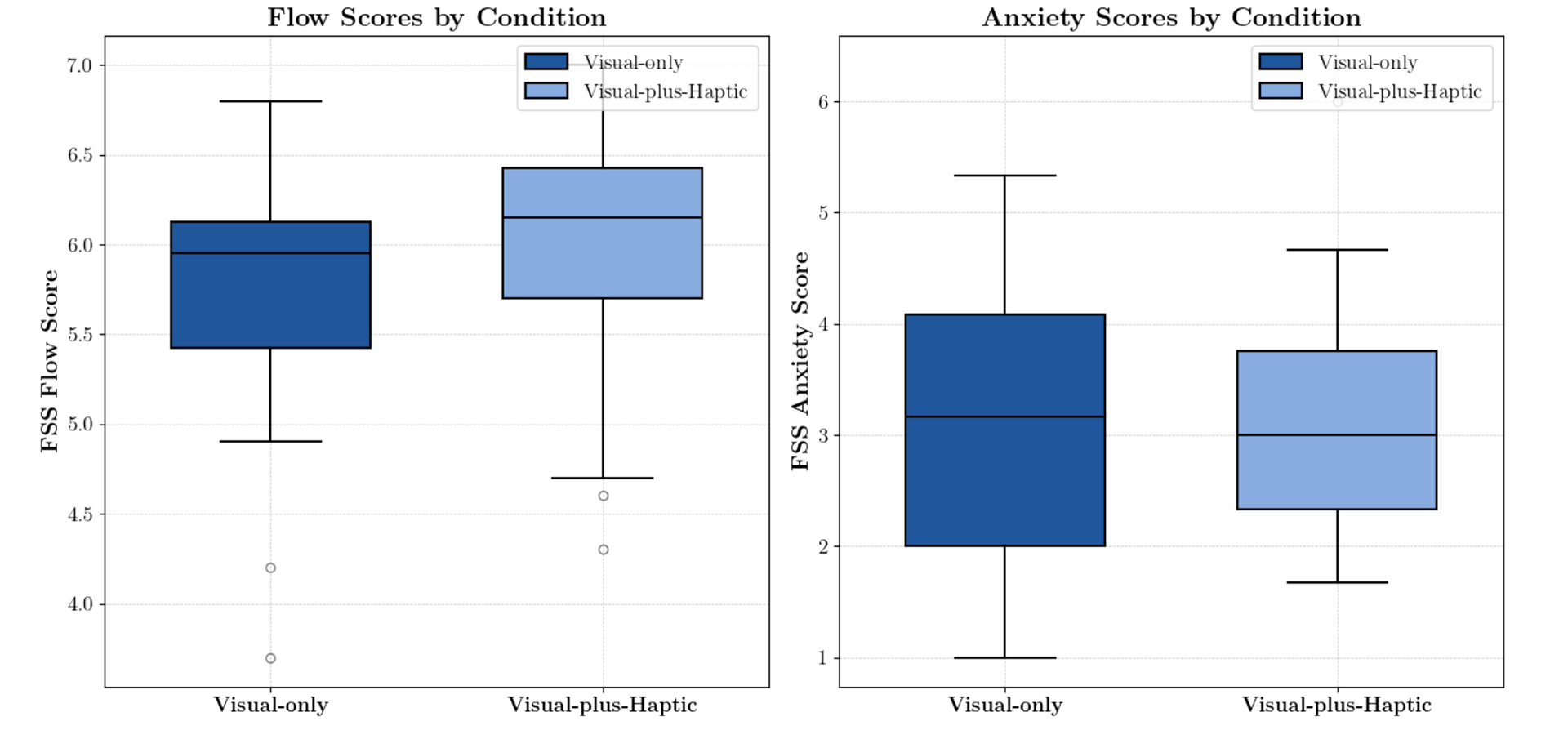

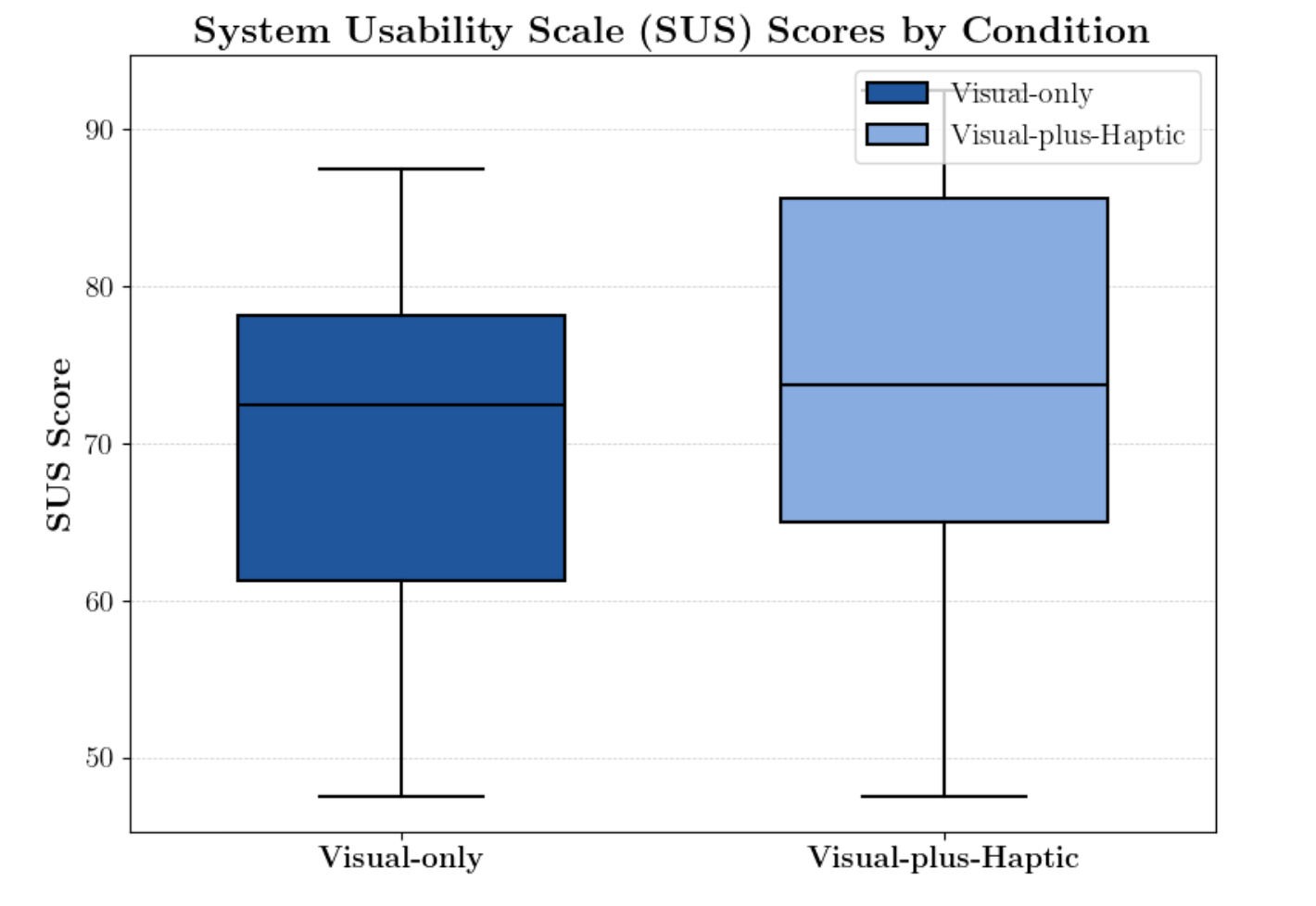

Subjective Perception and Cue Discriminability

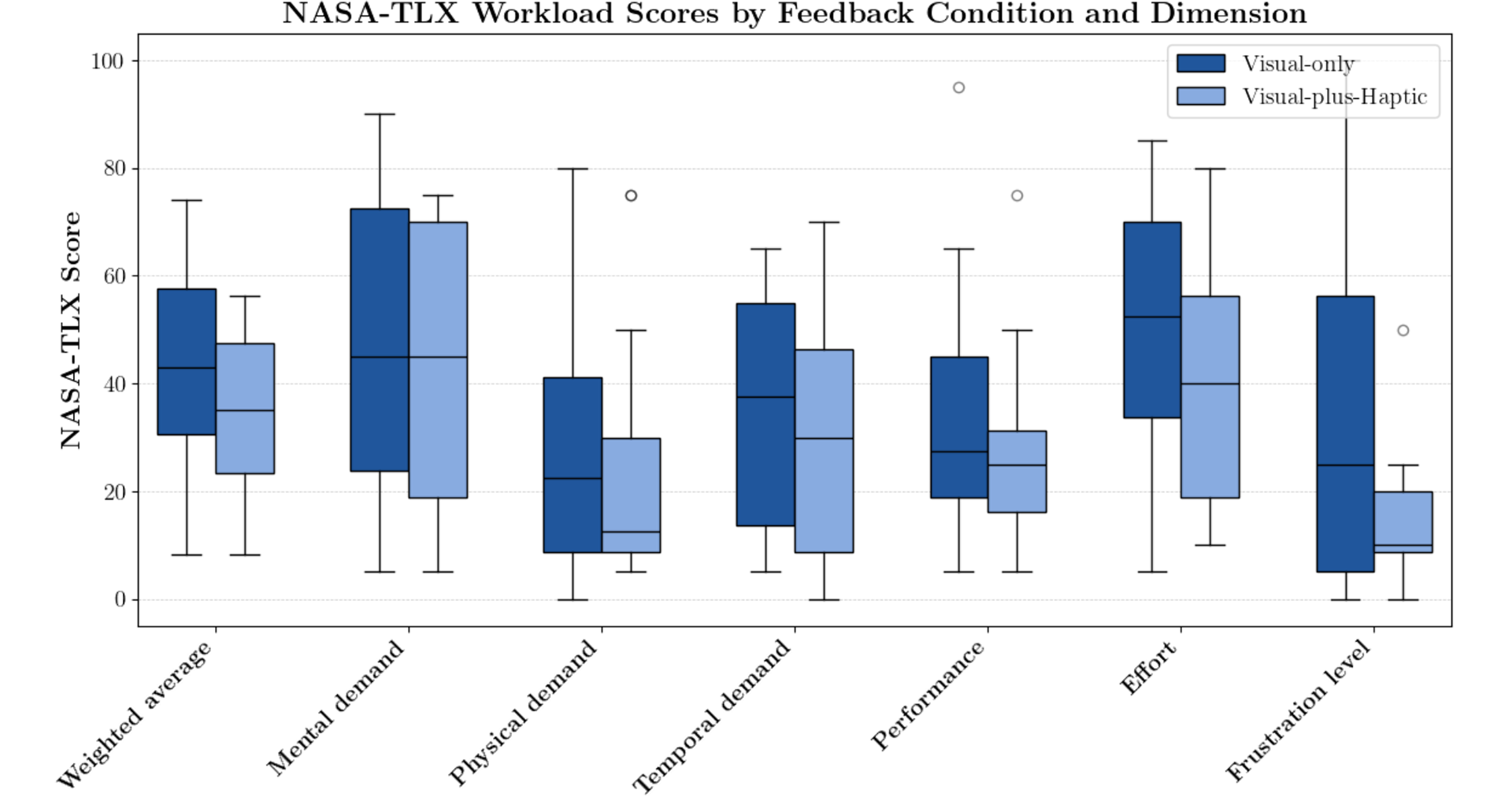

To understand operator perception, participants completed:

+ NASA-TLX workload assessment

+ SUS usability rating

+ Flow Short Scale for attention and immersion

These were accompanied by a cue recognition test evaluating discriminability of the haptic language.
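A cue recognition test of this kind is commonly summarised with a confusion matrix. The sketch below tallies which cue was presented against which cue the participant reported, then derives per-cue accuracy and the most frequent confusion. The function names are hypothetical, and the example trials in the usage note are placeholders, not study data.

```python
from collections import Counter, defaultdict


def confusion_counts(trials):
    """Tally (presented, reported) pairs into a nested count table."""
    matrix = defaultdict(Counter)
    for presented, reported in trials:
        matrix[presented][reported] += 1
    return matrix


def recognition_accuracy(matrix):
    """Fraction of presentations of each cue that were identified correctly."""
    return {cue: row[cue] / sum(row.values()) for cue, row in matrix.items()}


def most_confused(matrix):
    """Return ((presented, reported), count) for the commonest error, or None."""
    pairs = Counter()
    for presented, row in matrix.items():
        for reported, n in row.items():
            if reported != presented:
                pairs[(presented, reported)] += n
    return pairs.most_common(1)[0] if pairs else None
```

For example, with the placeholder trials `[("contact", "contact"), ("contact", "alignment"), ("alignment", "alignment")]`, `recognition_accuracy` gives 0.5 for `contact` and 1.0 for `alignment`, and `most_confused` reports the `contact` to `alignment` confusion.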

Key findings:

+ Visual-plus-haptic conditions consistently reduced perceived mental demand, effort, and frustration.

+ Cue recognition accuracy was high for alignment and boundary cues, with moderate confusion for brief contact cues, informing refinements to temporal structuring.

+ Users reported stronger situational confidence and clearer interpretation of spatial relationships when haptic cues were present.

Results

Across all participants, the introduction of symbolic haptics produced measurable behavioural and perceptual gains:

+ An approximately 40% reduction in total errors, particularly boundary violations and misalignments.

+ Improved precision performance, especially during peg-in-hole insertion.

+ Significant workload reduction (NASA-TLX) and consistently strong SUS usability ratings.

+ Enhanced perceptual clarity, as reflected in improved cue recognition and user interviews.

Collectively, these outcomes show that even with the limited vibrotactile bandwidth of smartphones, structured symbolic feedback can significantly improve operator accuracy, reduce uncertainty, and support more stable teleoperation behaviour.

Limitations and Future Work

Key constraints include:

+ Limited temporal resolution of smartphone actuators

+ Relatively simple manipulation tasks compared to full industrial workflows

+ Controlled laboratory conditions (stable lighting, controlled latency, fixed geometry)

+ Short participant exposure and minimal training

Future work should examine:

+ Adaptive haptic vocabularies that respond to operator uncertainty

+ Multimodal feedback including audio or predictive overlays

+ Force-sensitive tasks and dynamic environments

+ Long-term learning effects

Conclusion and Implications

The results demonstrate that even with the restricted bandwidth of smartphone vibrotactile motors, structured symbolic haptics can significantly improve operator accuracy, reduce uncertainty, and lower workload in remote manipulation tasks.

This project delivers:

+ A validated smartphone-based teleoperation pipeline

+ A semantically grounded haptic cue language

+ Empirical evidence that low-cost devices can meaningfully support precision robotics

A joint Cambridge–MIT manuscript based on this work was submitted to IEEE ICRA 2026.